T3CON Recap—AI Demystified: Sustainable and Intelligent Best Practices for Artificial Intelligence

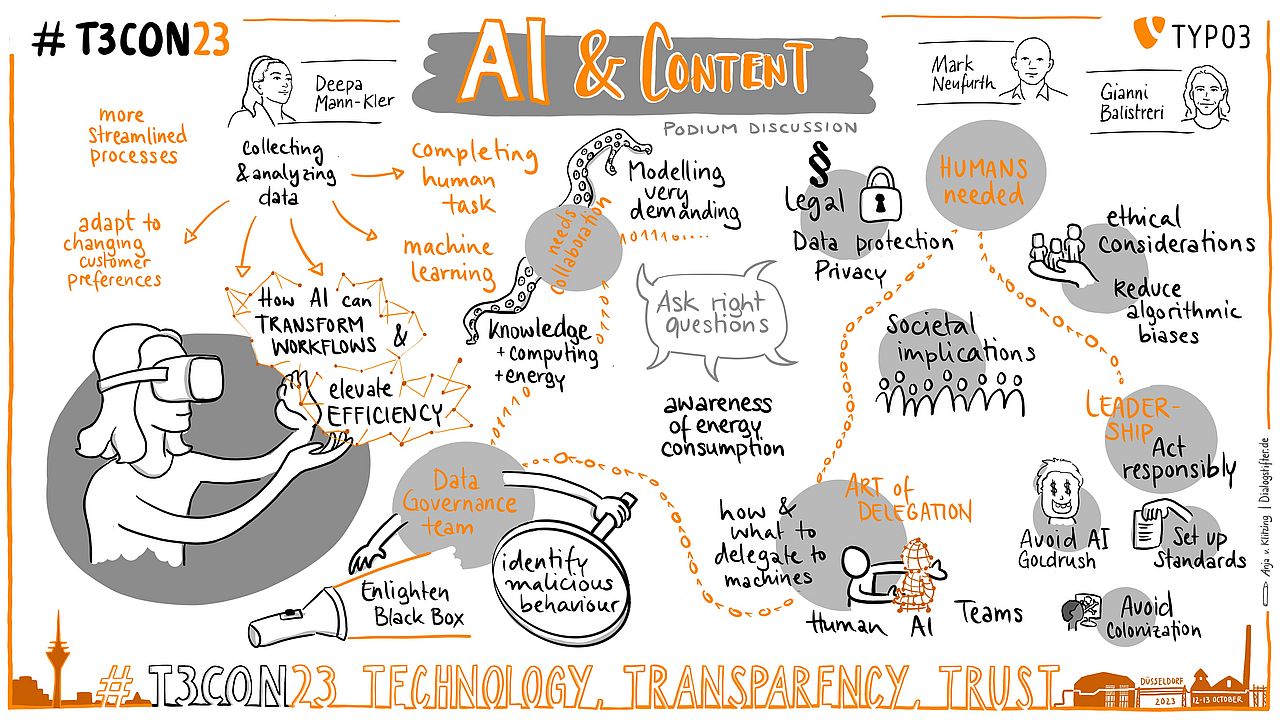

Is artificial intelligence our doom or our door to an ideal future? And how do we get time to make the right choices when innovation happens at break-neck speed? At T3CON23, thought leader Deepa Mann-Kler, hosting expert Mark Neufurth, and AI engineer Gianni Balistreri discussed both the immense potential and the great challenges we’re facing with this rapidly evolving technology.

The conversation dispelled misconceptions, uncovered uncomfortable facts, and exemplified best practices. Recognizing the human factor and the importance of good governance, openness, and collaboration may be the key to using AI intelligently and sustainably.

Read on for a full recap of this panel discussion, or catch up on what else you missed at T3CON23 and get ready for T3CON24.

Table of contents

AI for the repetitive, complex, and routine

Avoiding the AI gold rush

Four challenges to AI success

Need for real-time bias detection

Contingencies for unexpected AI failures

A call for responsible AI policies

Addressing AI ethics and compliance at Shopware

Negative impact of AI on society and environment

Scanning the AI horizon, learning from past mistakes

The future is not predetermined dystopian or ideal

AI for the repetitive, complex, and routine

Deepa Mann-Kler opened by demystifying AI and highlighting some of its potential positive effects, explaining how AI has been a game changer for collecting and analyzing data. “At the heart of AI is data,” she said. “The more complete the data on which an AI is trained, the more valuable the output.” However, the idea of data itself is nothing new, she explained: “The Ishango bone is thought to be one of the earliest pieces of evidence of prehistoric data storage. Paleolithic people used tally sticks to record trading activity and keep track of food supplies.”

“We already use AI every day,” Mann-Kler continued, “even if we don't always realize that companies across many industries are leveraging it to refine workflows and improve efficiencies.” She gave five examples illustrating this fact:

- “Amazon uses AI in its warehouses to optimize logistics. Robotics, powered by AI, assist in sorting, packing, and shipping parcels, making the entire supply chain more efficient. […] Scientists at Amazon Technologies in Berlin use AI to flag defective products before they ship. […] Every single item that passes through their warehouse is scanned, and this system is three times more effective than manually identifying damaged products.”

- “Adobe Sensei uses AI for customer experiences and creativity. It is used by analysts, marketers, creatives and advertisers to streamline end-to-end workflows from ideas through to production. And with image discovery and manipulation, Sensei recognizes pictures in the Adobe Creative Cloud media library. It then suggests tags that identify elements within the images, such as faces or buildings, which makes categorization and searching easier.”

- “Uber uses AI to detect fraud, identify risks, match riders with drivers, track and optimize routes, dynamic pricing, demand forecasting, user locations, traffic patterns, and historical ride information.”

- “Siemens uses AI in manufacturing for predictive maintenance. By analyzing its sensor data from machinery, the company can predict when equipment is likely to fail, enabling proactive maintenance and reducing downtime.”

- “Netflix invests heavily in machine learning, and it uses viewing history preferences and ratings to suggest movies and TV shows. The company operates in over 190 countries and uses AI translation and localization to translate content into multiple languages, including subtitles and voice overs.”

Mann-Kler also made it clear that AI does not mean no humans are involved: “These models are helpful, but sometimes they get fooled.” Using the example of Amazon, she explained that the people who act as Damage Experts “directly teach the AI how to make better decisions in the future. It is this collaboration between humans and machines that leads to much better results for our customers.”

Mann-Kler concluded that AI is best for completing repetitive, complex, and routine human tasks. “It frees humans to focus on creativity and strategy,” she said.

Avoiding the AI gold rush

Mann-Kler underscored that not everything can or should be automated with AI: “Some workflows require human judgment, creativity, or empathy. And some involve sensitive or confidential data that needs to be protected. And some workflows have ethical or legal implications.”

Mann-Kler urged great care to avoid the AI gold rush, saying AI “has to be implemented responsibly by addressing ethical considerations, data privacy, and potential biases.” The technology has to employ algorithms that ensure it is balanced and sustainable.

Again, artificial intelligence can’t work without humans. She explained that “AI implementation involves a combination of conscious, thoughtful practices, ethical considerations, and ongoing vigilance.” The frameworks must be built to prioritize fairness, transparency, and accountability, as well as standards:

“Industry standards and guidelines are essential to AI development and deployment. AI models must be trained ethically on diverse and representative datasets to reduce biases.”

– Deepa Mann-Kler, Neon

Four challenges to AI success

Later in the session, Mark Neufurth brought another dimension to the discussion of the AI gold rush. He explained how AI is indeed a true game changer, but that it is necessary that we ask “how to make it feasible, how to make it affordable, and how to make it usable for our customers.”

Thinking from the customers’ perspective, Neufurth listed four challenges to AI success:

- Knowledge. “Do you comprehend what this modeling is all about? […] AI and machine learning is something for the educated and the adults,” he remarked, and continued by explaining that we’re talking about an elite. There are few people with the knowledge necessary to work with AI. “You need mathematics. You need statistics. You need extensive knowledge of both fields. […] There are only a few people capable of working with AI modeling. […] So you can’t scale.”

- Money. Neufurth told the story of how machine learning had existed for a decade when OpenAI and GPT came along. “Microsoft speculated and invested heavily into this tooling. It was a bet on the future. But we [others] don't have the money to bet on the future.”

- Ownership. A customer or company won’t have the competence to build their own foundation models. “There are legal aspects, sovereignty aspects, of course, and sustainability aspects. And last but not least, economic aspects,” Neufurth said. “We are betting heavily on open source models because we don't want to become dependent on only a couple of oligopolistic vendors in the market from the United States or China.”

- Complexity. Neufurth’s final point was that different customers will have different needs. Some “don’t want to occupy themself with AI on a daily basis,” while others need hosting services for AI solutions “built by the customers themselves.”

Need for real-time bias detection

Balanced and sustainable AI is not only about being a conscious and ethical human being. It also involves the question of who created the AI model. Deepa Mann-Kler quoted Moojan Asghari, founder of Women in AI: “Artificial intelligence will reflect the values of its creators. AI uses the data that its creator feeds it and will pass on the biases of its creators.”

She explained that this “is why we can't leave this work to just technologists, who are mostly male. We need to de-bias algorithms […]. These tools must detect and mitigate bias in real time.”

This has also been shown through the research of Joy Buolamwini, founder of the Algorithmic Justice League, which Mann-Kler pointed to: “Even though AI can automate many tasks, human judgment is crucial in the areas of ethical, legal, or societal implications. Judgments come with responsibilities and responsibility lies with humans at the end of the day.”

"We are betting heavily on open source models because we don't want to become dependent on only a couple of oligopolistic vendors in the market from the United States or China."

– Mark Neufurth, IONOS

Contingencies for unexpected AI failures

“How do we know we are doing things right?” Mann-Kler asked, rhetorically. “By conducting regular audits to identify and rectify issues, and implement continuous monitoring and assessment mechanisms to ensure alignment with ethical standards and regulatory requirements. This has to involve internal and external stakeholders, employees, customers, and relevant communities in the AI development processes.”

This means AI models must be explainable — which would open them up for feedback, transparent communication, and addressing concerns about the intended use and impact of AI technologies.

Mann-Kler also voiced concern that legislative frameworks will never protect perfectly and technology malfunction is always a possibility: “While legislators and legislation are still playing catch up with technology, and when national borders no longer apply, we still have to adhere to relevant regulations and legal frameworks. AI systems must comply with standards related to data protection, privacy, and other industry-specific requirements. We have to anticipate and develop redundancy and contingency plans in case of unexpected AI failures.”

A call for responsible AI policies

Mann-Kler was clear that artificial intelligence requires a collective effort. “We must encourage openness and collaboration by sharing insights, best practices, and lessons learned, to collectively address challenges and foster a culture of responsible AI development.”

According to Mann-Kler, a responsible AI policy should:

- Align with organizational values, mission, principles, and objectives.

- Establish oversight procedures for reviewing and approving AI systems before deployment.

- Detail proper data collection, storage, usage, and deletion protocols.

- Include considerations around consent, privacy, security, and data biases.

Addressing AI ethics and compliance at Shopware

Gianni Balistreri added perspectives on how Shopware is meeting the challenges of ethics and compliance in connection with their ecommerce software.

“Data scientists and data engineers are not only building black boxes that only they can understand. Often not even they can understand them, because they’re so complex.”

– Gianni Balistreri, Shopware

To address this problem, Balistreri implemented three things:

“One thing is a Data Governance team. So we can monitor what the AI system does, and what the outcome looks like, and prevent malicious behavior. The second thing is to ‘enlighten the black box,’ so to speak. […] You can take several steps to understand why this AI system does what it does. And if you can understand it, and if something goes wrong, you can interfere. Thirdly, we have a full list of policies for how to improve security and make sure that the data that we have is securely stored. […] So it is important for us to make sure it is anonymized. And even if the hackers get into our system, they cannot use the data because they cannot connect it with other people.”

Negative impact of AI on society and environment

Mann-Kler went on to discuss the potentially disastrous societal effects of AI, both when it distorts and magnifies biases and when it is tested on those without the choice to opt out, concluding that “we all have a responsibility in how we share our future visions of AI.”

“Timnit Gebru, director of the Distributed AI Research Institute, says researchers, including many women of color, have been saying for years that these systems interact differently with people of color,” Mann-Kler explained. “Her colleagues have also expressed concern about the exploitation of heavily surveilled and low-wage workers who helped support AI systems through content moderation and data annotation. They are often coming from poor and underserved communities like refugees and prison populations. And content moderators in Kenya experienced severe trauma, anxiety, and depression from watching videos of child sexual abuse, murders, rapes, and suicide in order to train ChatGPT on what is explicit content; they were paid less than €1.25 an hour.”

Some of the positive impacts of AI also have negative side effects. Microsoft increased its worldwide water consumption by 34% (1.7 billion gallons or 6.4 billion liters) in 2022, as a result of increased AI training. During the same period, Google used 5.6 billion gallons (21.2 billion liters) of water, a 20% increase also attributable to machine learning. In 2023, AI data centers exacerbated a drought in Iowa.

“I would ask myself, even if I'm working in this space, is there a better way of doing what I've been doing? Whose voice is not in the room? Who can I include? What don't I know?”

– Deepa Mann-Kler, Neon

Scanning the AI horizon, learning from past mistakes

All three panelists commented on the differences in response to AI. Balistreri pointed out that the approach to AI is different between countries and continents: “In my opinion, especially in Germany and in Europe, we are afraid of this technology. […] And other countries, such as the US, Canada, Israel, and China, have another kind of mentality. With these new technologies that are fast-paced, you have to have the mentality where you do some experiments and see what happens.”

For Mark Neufurth, this difference could be both good and bad: “In Europe, we are rather passive, looking at it rather tentatively. […] That is good […] because we are thinking twice about things. On the other hand, in terms of platform economies, an established platform could evolve that dominates everything and that nobody can interfere with anymore.”

Deepa Mann-Kler said we should learn from past mistakes.

“I would ask myself,” she said, “even if I'm working in this space, is there a better way of doing what I've been doing? Whose voice is not in the room? Who can I include? What don't I know? I would be taking a break to think about AI. Within the context of everything it touches — horizon scanning — what are the potential implications? What are the potential unintended consequences? What are the risks?”

The future is not predetermined dystopian or ideal

Deepa Mann-Kler ended by commenting on the tendency to provide an “extreme dystopian doomsday narrative of the future where AI destroys the human species.”

She quoted foresight expert Alex Fergnani, who writes, “public intellectuals should impartially discuss multiple images of the future to teach the public that the future is not predetermined. They should also meticulously examine the visions of the future they present, taking into account the emotional load they carry, in order to steer clear of fear-mongering or excessive idealization. Additionally, it is crucial for them to ensure that these visions are not influenced by fleeting trends and immediate events.”

Mann-Kler elaborated: “When printing was invented, people were afraid we would lose our memories. When bicycles were invented, people were afraid that it would encourage sexual promiscuity. And when steam trains were invented, people were worried that they would move so fast that we would all break our bones.”

Building a great solution takes time. “What tech is made, who makes it, and how it is used is something we should all have a say in.”

Be sure to sign up for T3CON24 to see more amazing talks like this one.