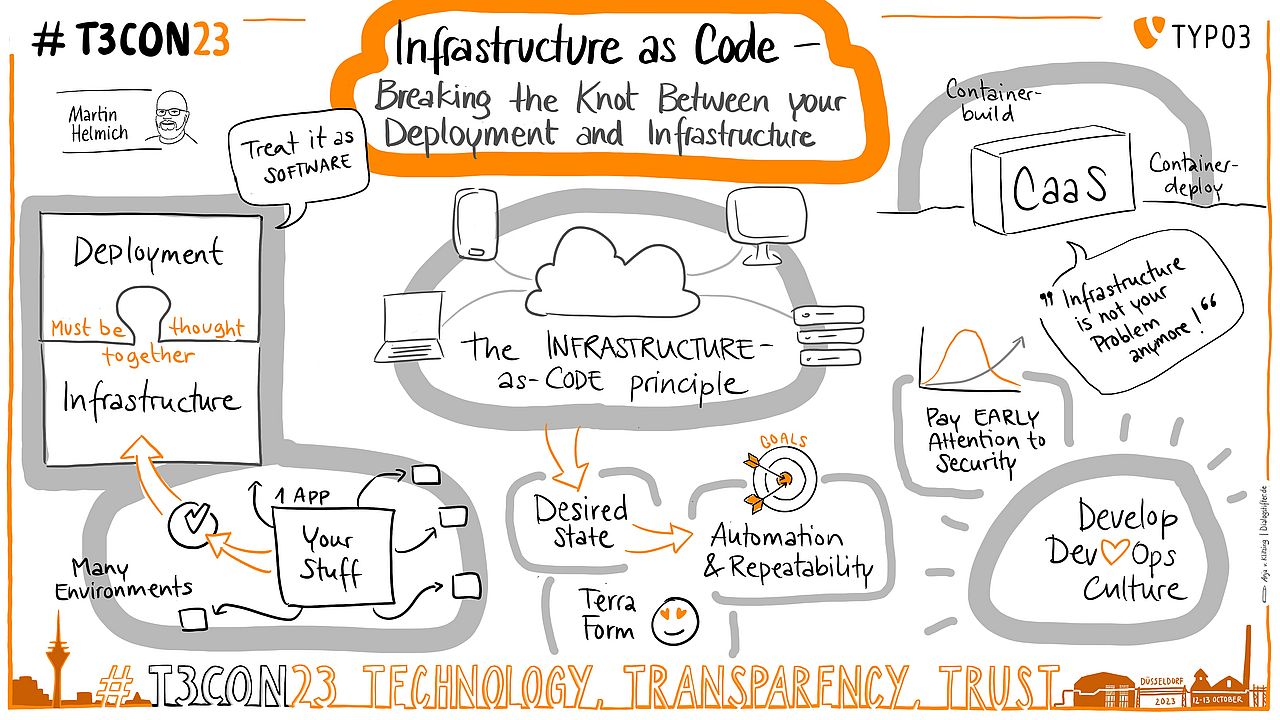

T3CON23 Recap—Infrastructure as Code

Do you or your developers still set up your infrastructure manually? Is the deployment process a fragile construction of scripts, clicks, and ad-hoc commands? According to Martin Helmich, it’s time for automation.

In his talk Infrastructure as Code at T3CON23, Martin explored the interdependency of web application deployment and backend infrastructure, and how automation has changed how web applications get deployed.

Missed T3CON23? No worries. This article is part of our recap series, and T3CON24 is less than a year away.

A lesson on the history of code deployment

20 years ago, deployment processes were arduous and highly manual. Martin remembers how he deployed a TYPO3 project from a local installation to the production server, including FTP uploads and manual editing of files. This worked out fine, as long as Martin was the only person involved in the project. “I noticed quite quickly why this wasn't a good idea,” he recalled. “Especially when you're working on projects with multiple people.”

Fast-forward 10 years, to 2013. At a conference, Martin presented his state-of-the-art deployment process that included version control, code review, CI/CD systems, and an early, pre-1.0 version of Surf (a package still in use today). Inspired by the Ruby tool Capistrano, Surf implements atomic deployment, ensuring that an application cannot be deployed in a partially updated state. In a nutshell, the tool uploads a new version of an application to a new directory and then updates symbolic links to point to the directories and files of the new version.

Atomic deployments quickly became mainstream, allowing you to immediately revert to a previous release if things went wrong.
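To make the symlink switch concrete, here is a minimal Python sketch of the idea. It is not how Surf or Capistrano are implemented. The directory layout (`releases/` and `current`) and the function name `activate_release` are assumptions made for illustration, and the code assumes a POSIX system where renaming a symlink over another is atomic.

```python
import os
import tempfile
from pathlib import Path


def activate_release(base_dir: Path, release_name: str) -> None:
    """Point base_dir/current at base_dir/releases/<release_name>."""
    target = base_dir / "releases" / release_name
    if not target.is_dir():
        raise FileNotFoundError(f"release directory missing: {target}")

    current = base_dir / "current"
    tmp_link = base_dir / "current.tmp"

    # Remove a leftover temporary link from an earlier, interrupted run.
    if tmp_link.is_symlink():
        tmp_link.unlink()

    # Create the new symlink under a temporary name first ...
    os.symlink(target, tmp_link)

    # ... then rename it over the live link. The rename swaps the link in
    # one step, so visitors see either the old release or the new one.
    os.replace(tmp_link, current)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        for name in ("release-1", "release-2"):
            (base / "releases" / name).mkdir(parents=True)

        activate_release(base, "release-1")  # initial deployment
        activate_release(base, "release-2")  # deploy a new version
        activate_release(base, "release-1")  # roll back
        print(os.readlink(base / "current"))
```

A rollback is the same operation as a deployment: the link is simply pointed back at a release directory that is still on disk.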

If you’re not yet using any deployment tools, Martin recommended starting with Surf or the PHP tool Deployer.

The relationship between deployment and infrastructure

Martin explained that automated deployments became a must with the rise of agile methodologies. With the mantra of “release early and often,” deployment tools like Surf or Deployer solved a common problem: bridging the gap between the development and production environments.

As applications grew larger, development became more complicated, and deployment had to cater for this complexity. “Typically, if you have an application, or if you have a web product, you don't deploy it to a single piece of infrastructure. In many cases, you have many environments. You have a production environment. Maybe you have an acceptance environment that you give your customer. Then we have a test integration environment. And don't even get me started on individual development environments”, Martin said.

In a multi-environment scenario, deployments must be consistent across environments. This is an entirely new situation, Martin pointed out. Software developers can no longer focus solely on an application itself. They have to ensure that the application behaves exactly the same way regardless of the environment it is deployed to.

How do you ensure this consistency? The easiest way is to deploy environments just like applications.

“I think my main point for today is that we shouldn't just talk about deployment and infrastructure, but we should talk about deploying your infrastructure.”

– Martin Helmich

The best practice for software deployment is that it is automated and repeatable. Martin describes what can happen if you don’t automate: “Imagine you're trying to roll out a TYPO3 application manually. You might connect to a server via SSH, do a Git pull and a Composer update, and then run scripts to do your database migrations.”

“If you do this in a production environment and forget one of those steps, then — oh, no! — you have an internal server error. Boom. The customer calls you, and they’re not happy.”
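The manual steps Martin lists can be captured in a small script, so that none of them can be forgotten. The following Python sketch is only an illustration. The script name `./run-migrations.sh` is a hypothetical placeholder for whatever performs your database migrations, and the functions `deploy` and `dry_run` are names invented for this example.

```python
import subprocess
from typing import Callable, Sequence

# The steps from the quote, in a fixed order.
DEPLOY_STEPS = [
    ["git", "pull"],
    ["composer", "update"],
    ["./run-migrations.sh"],  # hypothetical placeholder script
]


def dry_run(command: Sequence[str], check: bool = True) -> None:
    """Stand-in runner that only prints the command it would execute."""
    print("would run:", " ".join(command))


def deploy(steps: Sequence[Sequence[str]], run: Callable = subprocess.run) -> int:
    """Run every step in order and return the number of completed steps.

    With the default runner, check=True makes subprocess.run raise
    CalledProcessError on a non-zero exit code, so the deployment stops
    at the first failing step instead of continuing half-finished.
    """
    completed = 0
    for command in steps:
        run(command, check=True)
        completed += 1
    return completed


if __name__ == "__main__":
    # Uses the dry-run runner so that running this file changes nothing.
    deploy(DEPLOY_STEPS, run=dry_run)
```

Calling `deploy(DEPLOY_STEPS)` without the `run` argument would execute the real commands in the current directory.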

This is why modern software development uses automated deployments. Setting up infrastructure manually is as prone to errors as deploying software manually. That’s why Martin is passionate about Infrastructure as Code: infrastructure that can be deployed like software.

How does Infrastructure as Code work?

If you describe the requirements of an application in words, you would say something like, “I need an Apache web server with PHP 8.2 and a MySQL 8.2.0 database.” With Infrastructure as Code, you formalize this description into a declarative specification that can be put into action. Tools can read the specification and compare the desired state of the infrastructure setup with the actual one. If the specification is updated, the tools can determine what needs to be changed and apply these changes.
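The compare-and-apply cycle described above can be sketched in a few lines of Python. This is a toy model, not the algorithm of any particular tool. The resource names, the `Change` type, and the functions `plan` and `apply` are assumptions chosen for illustration, and the "infrastructure" is just a dictionary.

```python
from typing import NamedTuple, Optional


class Change(NamedTuple):
    action: str            # "create", "update" or "delete"
    name: str
    value: Optional[str]   # desired value; None for deletions


def plan(desired: dict[str, str], actual: dict[str, str]) -> list[Change]:
    """Compare the desired state with the actual state."""
    changes = []
    for name in sorted(desired):
        if name not in actual:
            changes.append(Change("create", name, desired[name]))
        elif actual[name] != desired[name]:
            changes.append(Change("update", name, desired[name]))
    for name in sorted(actual.keys() - desired.keys()):
        changes.append(Change("delete", name, None))
    return changes


def apply(actual: dict[str, str], changes: list[Change]) -> dict[str, str]:
    """Return the state after applying the changes.

    A real tool would call vendor APIs here; this sketch only updates a copy.
    """
    result = dict(actual)
    for change in changes:
        if change.action == "delete":
            del result[change.name]
        else:
            result[change.name] = change.value
    return result


if __name__ == "__main__":
    # The specification from the text, written as data.
    desired = {"webserver": "apache", "php": "8.2", "mysql": "8.2.0"}
    # Assumed current state: older PHP, no database yet.
    actual = {"webserver": "apache", "php": "8.1"}

    changes = plan(desired, actual)
    for change in changes:
        print(change.action, change.name, change.value)

    actual = apply(actual, changes)
    print("remaining changes:", plan(desired, actual))
```

Because the specification describes a state rather than a sequence of commands, planning again after a successful apply yields no further changes.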

“The big advantage, if you put your infrastructure specification into code,” Martin explains, “is that you can treat it just like your software. […] You put it into version control, you can do code reviews, you can do static code analysis, you can do continuous integration, you can also do continuous delivery, you can roll these changes out automatically.” The goal is to have the same degree of automation and reproducibility as with software.

The right tools for Infrastructure as Code

The infrastructure landscape is wide and varied. You can choose to run servers on-premise or buy infrastructure from vendors. “And there are different offerings that range from Platform-as-a-Service [PaaS] to Infrastructure-as-a-Service [IaaS] offerings, where you have a gradually greater degree of control over your infrastructure”, Martin explained. Choosing the best option for you usually means prioritizing either control or convenience.

When you choose a vendor, you normally use their tooling by default. However, there are several vendor-independent tools available for infrastructure management. Terraform and Docker are two examples of tools that turn your infrastructure requirements into textual descriptions for easy automation.

Terraform: Deploy your infrastructure with a single command

Rooted in the cloud-native ecosystem, Terraform (or its open-source fork OpenTofu) orchestrates infrastructure based on a description language called HCL. Martin described how it works: “You have a machine-readable text file. [...] You describe the desired state of your infrastructure; for example: I want to have an AWS instance using the following image with the following amount of resources. And then [...] you can say terraform apply, and Terraform will run the API on all your vendors and provision these infrastructure items as required.” If the description changes, Terraform updates the infrastructure accordingly.

To address the diversity of today’s infrastructure landscape, Terraform has a plugin framework. Vendors who want to make their infrastructure available to Terraform can write custom plugins, and if you need a custom plugin that does not exist yet, writing one is straightforward.

Martin wrote a Terraform provider for his company, Mittwald, as a proof of concept. “Turns out it's not that hard”, he concluded. With this plugin, a Terraform user can include resources from the Mittwald cloud platform in their infrastructure.

With Terraform and similar tools, infrastructure can be deployed and managed just like applications. But with this model, you are still deploying the infrastructure first and the application second. Ideally, you could deploy an application with all the required infrastructure in a single step. Enter: containers.

Docker: Give your app a replicable environment

Container technology lets you package an application and all of its requirements into a deployable image, including operating-system-specific shared libraries or auxiliary binaries.

Compared to fully-fledged virtual machines, which are typically the basis of a PaaS offering, containers “give you a higher level of control over your infrastructure than traditional Platform-as-a-Service offerings, but you do not have that many problems you have to worry about, like with regular infrastructure deployments,” Martin pointed out.

The most prominent container system is Docker. Here is how it works:

In a text file called Dockerfile, you specify how your environment shall be built, and Docker turns this into a container image. Then, tools like Docker Compose let you describe, in a purely declarative way, how your containers shall be started and configured. The Dockerfile and the docker-compose file are text files that allow integrating the infrastructure deployment process into CI/CD pipelines and managing it like a software deployment.

The big advantage of containers is that all the application’s requirements are placed inside replicable container images. The target platforms only need to know how to run containers.

“So you basically split your infrastructure into multiple parts, and you take the parts that are of concern for you, like the required environments that your application needs to run. That's your problem. That's part of your deployment artifact when working with containers, and the rest of your infrastructure is not your problem anymore. All you need is an infrastructure that is able to run these containers”, Martin explained.

There are, however, types of resources that cannot be put into a container, such as managed DNS or mail services. Tools like Terraform are still useful for integrating resources like these.

Containers allow us to integrate operational concerns into a classic setup. Security patch management, for instance, can be made part of the standard deployment process, and you can automate security checks by adding a security scanner to the build pipeline.

Infrastructure can, and should, be treated like code

Automating deployment can help de-silo the development and operation teams, reduce friction, and shorten development cycles — getting your product to market sooner. As Martin pointed out, “If we're doing Agile, we're iterating quickly. And this also needs to apply to our infrastructure.”

The Infrastructure as Code approach is suitable for any kind of organization. The actual implementation depends on factors like your service level or how much of your infrastructure you manage yourself. Whenever you have a continuous delivery process for your software, you can easily integrate your infrastructure setup into the same process and manage applications and infrastructure closely together.

A range of tools is available for managing infrastructure as code. “There is no silver bullet”, Martin warned. “There’s no one right answer.” Choose the tools that fit your needs and use cases best. Terraform and Docker are two examples of how to turn infrastructure requirements into textual descriptions for easy automation. Treat your infrastructure as software and reap all the benefits you’ve come to rely on from modern software deployment: automation, replicability, and flexibility. As Martin suggested, “Try to make it as easily repeatable as you can.”

Be sure to sign up for T3CON24 to see more amazing talks like this one.