TYPO3 on AWS - Scalability and Performance

Basic Issue

In the previous articles, I discussed the benefits of AWS and outlined the key migration strategies. I also provided an overview of security in the context of AWS. Once the infrastructure has been defined and all relevant security aspects have been taken into account, further adjustments to TYPO3 may be necessary. This is especially true if you want to scale the web servers automatically and ensure optimum performance. Since TYPO3 is not cloud-ready by default, a number of precautions and modifications are needed before the migration.

Architecture

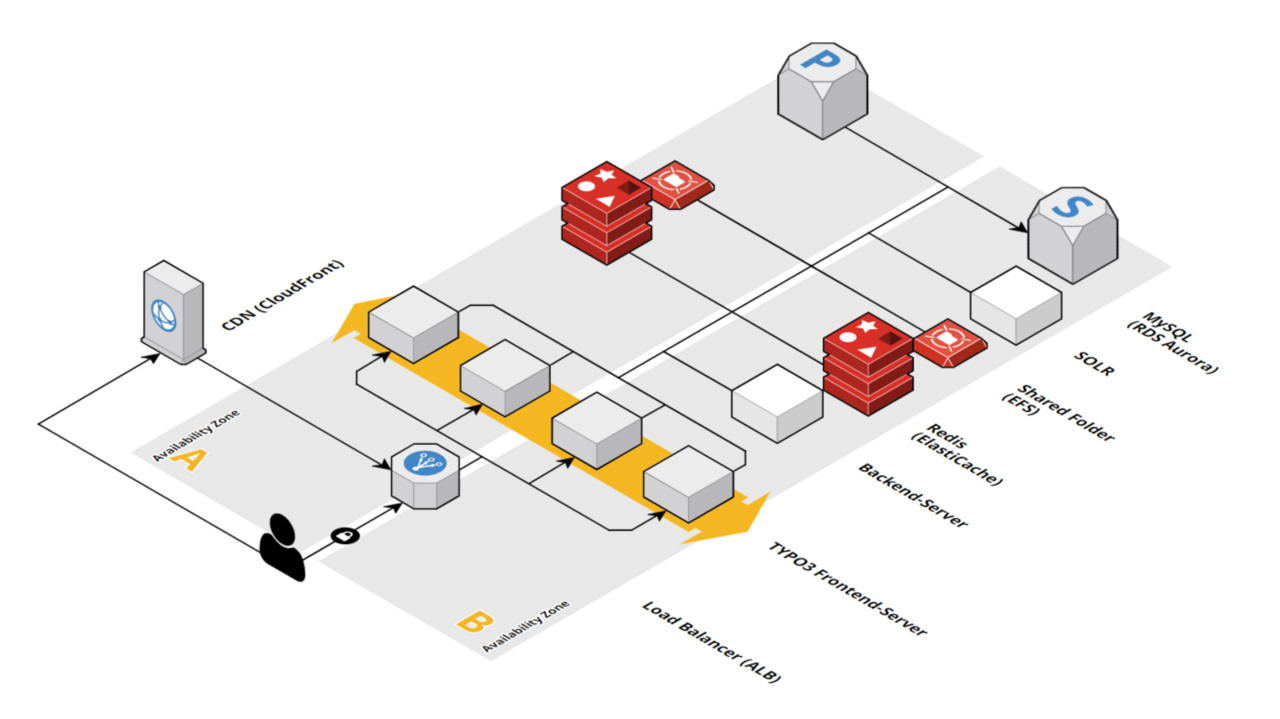

The starting point for the following considerations is the AWS infrastructure featured in the second part of this series of articles (which can be found at this link). This AWS environment consists of two Availability Zones and several services. Its core is the EC2 Auto Scaling group, which ensures the resiliency and high availability of the frontend. In addition, most components are redundant (database, Redis). TYPO3 must be adapted to this infrastructure, especially to the redundant web servers.

Adjustments in TYPO3

In our experience, the TYPO3 temp files cause the most problems in a multi-server setup. The typo3temp/ folder (or var/ folder, depending on whether it is a Composer or non-Composer installation) contains two kinds of files: files that matter only for the local cache, and files that are shared between the EC2 instances and should therefore not be stored locally on one instance. If the shared files are kept locally, the data becomes inconsistent between the instances, and TYPO3 works as intended only on the instance that holds the files.

A plausible solution would be to move these files to shared storage (NFS/EFS). The disadvantage is that performance suffers under high load. The I/O performance of such storage typically depends on the size of the volume (for example, on AWS a gp2 volume provides a baseline of 300 IOPS per 100 GB). Too many accesses can quickly exhaust the volume's IOPS. In addition, every access to shared storage incurs some network latency, which becomes noticeable when TYPO3 handles a high request volume. For these reasons, the cache can be slowed down significantly and then no longer serves its purpose.
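The gp2 figure above can be reproduced with a little arithmetic. This is a minimal sketch, assuming the published gp2 baseline of 3 IOPS per GiB with a floor of 100 and a cap of 16,000 IOPS; the function name is chosen for illustration only.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume: 3 per GiB, at least 100, at most 16,000.

    Assumption: these limits follow the published gp2 baseline figures.
    """
    return max(100, min(16_000, 3 * size_gib))


# Small volumes have very little I/O headroom for a busy shared cache.
for size in (20, 100, 1000):
    print(size, "GiB ->", gp2_baseline_iops(size), "IOPS")
```

A 100 GiB volume yields the 300 IOPS mentioned in the text, which several web servers writing cache files concurrently can use up quickly.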

It is therefore a good idea to store locally everything that does not need to be shared, so that these problems do not arise. Nevertheless, in a multi-server setup, dynamic files must be kept on shared storage. This includes, for example, the images in the typo3temp/assets/ folder and the fileadmin/ directory. The fileadmin/ directory contains images and files that belong to the so-called “editor realm”, that is, files that all instances must be able to access, so the data must be identical on all EC2 instances. Static files in directories such as typo3conf/ext/ or typo3conf/l10n/ can instead be deployed to all nodes.

Once the necessary files are available on NFS/EFS, one could assume that everything is prepared for scaling. However, you should make sure that only the dynamic files that really need to be shared are stored there. Log files are an example of how not to do it: if the log files are on EFS, the servers cannot write to the same file at the same time. Instead, one server writes its entry and only then can the next server access the file, which avoids data inconsistencies. As a result, the fastest server logs first. In the worst case, the log message of a less busy server ends up ahead of that of a busier server, even though the less busy server received its request later. Here is an illustrative example:

Server 1 receives a request at 10:53 and is under heavy load

Server 2 receives a request at 10:54 and has no load

Log file in the worst case:

10:51 Logmessage: all good

10:52 Logmessage: all good

10:54 Logmessage from Server 2

10:53 Logmessage from Server 1
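A central log store can order entries by their event timestamp rather than by the order in which they were written. The following is a minimal sketch of that re-ordering, using the entries from the example above; the function name and the tuple layout are assumptions made for illustration.

```python
from datetime import datetime

# Entries in the order they reached the shared log file (worst case above).
arrival_order = [
    ("10:51", "Logmessage: all good"),
    ("10:52", "Logmessage: all good"),
    ("10:54", "Logmessage from Server 2"),
    ("10:53", "Logmessage from Server 1"),
]


def by_event_time(entries):
    """Return the entries sorted by their HH:MM timestamp."""
    return sorted(entries, key=lambda entry: datetime.strptime(entry[0], "%H:%M"))


for timestamp, message in by_event_time(arrival_order):
    print(timestamp, message)
```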

Logs should therefore be offloaded to separate storage, such as ELS or Graylog. At root360, we store all logs on a separate EBS volume that is attached to a separate bastion host.

Moreover, when offloading files to NFS/EFS, it is recommended to use a locking mechanism so that the instances do not get in each other's way when using or editing the files. There are different locking strategies. Because locking on NFS/EFS does not work reliably, the following TYPO3 extension should be used together with AWS ElastiCache for Redis: https://github.com/b13/distributed-locks
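To illustrate the general idea behind a lock held in a central key-value store, here is a minimal sketch. It does not show how the extension above is implemented. The `InMemoryStore` class is a hypothetical stand-in for a store that offers "set if absent, with expiry" (the kind of operation Redis provides), and all names are assumptions.

```python
import time
import uuid


class InMemoryStore:
    """Hypothetical stand-in for a central store with set-if-absent and expiry."""

    def __init__(self):
        self._data = {}  # key -> (value, expires_at)

    def set_if_absent(self, key, value, ttl_seconds):
        now = time.monotonic()
        current = self._data.get(key)
        if current is not None and current[1] > now:
            return False  # lock is held and has not expired yet
        self._data[key] = (value, now + ttl_seconds)
        return True

    def delete_if_equals(self, key, value):
        current = self._data.get(key)
        if current is not None and current[0] == value:
            del self._data[key]
            return True
        return False


def acquire(store, name, ttl_seconds=30):
    """Try to take the lock; return an owner token on success, else None."""
    token = uuid.uuid4().hex
    return token if store.set_if_absent(name, token, ttl_seconds) else None


def release(store, name, token):
    """Release the lock only if this token still owns it."""
    return store.delete_if_equals(name, token)


store = InMemoryStore()
first = acquire(store, "lock:example")    # first instance gets the lock
second = acquire(store, "lock:example")   # second instance is refused
print(first is not None, second is None)
release(store, "lock:example", first)
print(acquire(store, "lock:example") is not None)
```

The expiry means a crashed instance cannot hold the lock forever, and the owner token prevents one instance from releasing a lock that another instance has taken in the meantime.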

Performance can also benefit significantly from a cache system. Central caching via Redis, as shown in the architecture sketch, is therefore useful. In addition, in a multi-server setup the sessions must be stored centrally, for which Redis can also be used. The caches can be cleanly separated from each other and from the sessions by using several databases within the Redis server. An example of configuring Redis is presented in this blog post: https://blog.herrhansen.com/redis-cache-typo3/

Our architecture sketch also includes a separate EC2 instance as a backend server. We generally recommend separating the frontend and backend, which can serve a variety of purposes. For example, the backend server can run cron jobs at the system level and perform lengthy, resource-intensive tasks such as indexing or imports/exports separately from the frontend servers, so that they do not affect the availability and performance of the website.

If you have deliberately decided against a backend server, you face a problem in an Auto Scaling group: the deployment configures the same cron job on every instance (I will talk about deployment in the next paragraph). As a result, each instance within the group runs the same cron job at the same time. An example of this problem is birthday greetings sent by email: if you have 4 instances in the Auto Scaling group, the customer receives 4 birthday mails in the worst case. At root360, we have therefore worked out a solution: we provide script snippets that ensure that only one server performs a cron job. Instead of all servers running the cron job at the same time, only the first one in the respective list runs it. An explanation of the snippet can be found in our root360 knowledge base at the following link: https://root360.atlassian.net/wiki/spaces/KB/pages/66337718/Scripts+Snippets#run-once-per-role.sh
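The "first one in the list" rule can be sketched in a few lines. This is not the root360 snippet itself but a minimal illustration of the idea, assuming every instance can obtain the same list of instance IDs for its role; the function name and the IDs are hypothetical.

```python
def should_run_cron(my_instance_id, instance_ids):
    """Return True only for the instance that sorts first in the list.

    Assumption: every instance sees the same list, so exactly one
    instance concludes that it is responsible for the job.
    """
    if not instance_ids:
        return False
    return my_instance_id == min(instance_ids)


# Hypothetical instance IDs of the four servers in the group.
group = ["i-0c3", "i-0a1", "i-0d4", "i-0b2"]

for instance in group:
    print(instance, should_run_cron(instance, group))
```

With this rule, only `i-0a1` sends the birthday mail, no matter how many instances the group currently contains.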

In the previous section, I briefly referred to deployment. It is particularly important in a multi-server setup to ensure that all servers within the Auto Scaling group run the same release. For this, root360 offers a ready-made solution that pulls your release directly from your Git or continuous integration system and distributes and installs it on the individual instances. In an intermediate step, the release is stored in an S3 bucket. If autoscaling becomes active and an additional instance needs to be provisioned, the release of the last deployment is automatically pulled from there and installed on the new instance. During deployment, values such as database users and database hosts can be set dynamically to provision a fully functional TYPO3 application. If you want to know more about deployment and what options we have, you can find more information here: https://root360.atlassian.net/wiki/spaces/KB/pages/66154327/Quickstart#Deployment

Caching

Finally, here are some tips on optimizing performance using Solr and a suitable cache system.

For fast search in TYPO3, you can use Solr. We usually provision a separate EC2 instance for it. This “Solr product” prepared by us comes with Solr pre-installed on the EC2 instance. The necessary extensions, such as the Solr extension with the necessary configurations, are also deployed to this instance. Likewise, root360 installs the Solr TYPO3 plugin (https://github.com/TYPO3-Solr/solr-typo3-plugin), so you don't have to worry about that either.

It is also good practice to always have a cache system in front of the application servers, because an upstream cache relieves the servers. This can be a CDN such as CloudFront, for example, or Varnish. You can store both static HTML pages and media files in CloudFront. For Varnish, we have prepared ready-made configurations for TYPO3. For this we evaluated the TYPO3-Varnish moc and prepared it for Varnish accordingly. We also run Varnish as a redundant component to increase availability. Through our root360 configuration management, we ensure that Varnish is configured the same way in all Availability Zones. This increases both the availability of Varnish and overall performance.
